Views expressed here are solely that of my own. Please make sure that you test the code/queries that appear on my blog before applying them to the production environment.

Saturday, December 29, 2007

Birth of a New Oracle User Group (AIOUG)

A new Oracle user group takes birth and this time it’s in India. The new group is named All India Oracle User Group. I think this a very nice initiative taken by Murali Vallath and his team to come up with this idea. This has been long awaited and it will really help Oracle users in India to communicate and network with each other and share their experience.

I have registered myself with the group.

I wish the AIOUG team a very success.

Tuesday, December 18, 2007

Inconsistency between DBA_DATA_FILES, DBA_FREE_SPACE, and DBA_SEGMENTS views

Dear all,

A question has been raised on Technet forum that inconsistent information is being returned from Oracle dictionary views. OP is trying to relate used/free bytes returned by these dictionary views.

Information provided by OP:

Total bytes occupied by data files is: 11,010,048,000

Total bytes available are: 220,200,960

Total bytes used by objects: 10,989,076,480

Free Space + Used Space = 220,200,960 + 10,989,076,480 = 11,209,277,440

So, the sum of “Free Space” and “Used Space” is more than the “Total Space” available to the datafiles. The question is “How can I get more space than the existing one?”

Answer to the question goes here:

I have TEST user in my database and the default tablespace of this user is TEST_TS. I create couple of tables in this schema:

SQL> conn test/test Connected. SQL> SQL> select * from tab; TNAME TABTYPE CLUSTERID ------------------------------ ------- ---------- EMPDATA TABLE TEST_ERRORS TABLE SQL> create table test1 as select * from tab; Table created. SQL> create table test2 as select * from tab; Table created. SQL> create table test3 as select * from tab; Table created. SQL> select * from tab; TNAME TABTYPE CLUSTERID ------------------------------ ------- ---------- TEST1 TABLE TEST2 TABLE TEST3 TABLE EMPDATA TABLE TEST_ERRORS TABLENow, I will drop these newly created tables:

SQL> drop table test1; Table dropped. SQL> drop table test2; Table dropped. SQL> drop table test3; Table dropped.Now, query the dictionary views for used, free and allocated bytes/blocks information:

SQL> select blocks from dba_data_files where tablespace_name = 'TEST_TS'; BLOCKS ---------- 3712 SQL> select sum(blocks) from dba_free_space where tablespace_name = 'TEST_TS'; SUM(BLOCKS) ----------- 3680 SQL> select sum(blocks) from dba_segments where tablespace_name = 'TEST_TS'; SUM(BLOCKS) ----------- 48Opps, we get more space (3680 + 48 = 3728) than being allocated to the datafiles. Probably, by now you might have arrived to the answer, but let me reveal it to you. OP hasn’t mentioned the Oracle database version but I am pretty sure it’s Oracle 10g or above. With Oracle database 10g, Oracle has added a new feature of recycle bin. When you drop an object it goes and stays in your recycle bin and will occupy the same amount of space. You need to purge the object to reclaim the space. This is what is happening in this case. Dropped objects are still lying in the TEST_TS tablespace and being counted by the DBA_SEGMENTS view, whereas, DBA_FREE_SPACE correctly report the free space available. Let me purge the dropped objects out of the recycle bin and rerun the queries:

SQL> show recyclebin ORIGINAL NAME RECYCLEBIN NAME OBJECT TYPE DROP TIME ---------------- ------------------------------ ------------ ------------------- TEST1 BIN$BEExbY8xS0aXH3U+e9XPDg==$0 TABLE 2007-12-18:13:42:36 TEST2 BIN$WGV0P4B4TaCUukiPyctTPg==$0 TABLE 2007-12-18:13:42:38 TEST3 BIN$1P4aTA1IR8ijw4btdRkmzw==$0 TABLE 2007-12-18:13:42:39 SQL> purge recyclebin; Recyclebin purged. SQL> select blocks from dba_data_files where tablespace_name = 'TEST_TS'; BLOCKS ---------- 3712 SQL> select sum(blocks) from dba_free_space where tablespace_name = 'TEST_TS'; SUM(BLOCKS) ----------- 3680 SQL> select sum(blocks) from dba_segments where tablespace_name = 'TEST_TS'; SUM(BLOCKS) ----------- 24Wow, Oracle now reports correctly. The sum of “Free Space” and “Used Space” (3680 + 24 = 3704) is less than the “Total Space” (3712) available to the datafiles. Regards

Tuesday, December 04, 2007

Issues with COMPATIBLE Initialization Parameter

Hello,

I was trying to transport a tablespace from one database (Prod_A) to another (Prod_B), both of which are running on Oracle 10g Release 2 (10.2.0.3). I took metadata export and ftp'ed both export dump and datafiles to the destination database server. While trying to import into Prod_B, I received "ORA-00721: changes by release 10.2.0.3.0 cannot be used by release 10.2.0.0.0" error.

I cross checked version of both the databases by querying V$VERSION and found that both were on 10.2.0.3, but still Oracle complains about different versions. Then, I had a look at COMPATIBLE initialization parameter of both the databases. This parameter was set to "10.2.0.3" on Prod_A, but on Prod_B it was "10.2.0". I changed this parameter on Prod_B and bounced database. Everything went fine and I was able to import tablespace into Prod_B.

Following is the import log where import terminated unsuccessfully.

Import: Release 10.2.0.3.0 - Production on Mon Dec 3 13:01:09 2007

Copyright (c) 1982, 2005, Oracle. All rights reserved.

Connected to: Oracle Database 10g Enterprise Edition Release 10.2.0.3.0 - 64bit Production

With the Partitioning, OLAP and Data Mining options

Export file created by EXPORT:V10.02.01 via conventional path

About to import transportable tablespace(s) metadata...

import done in AR8MSWIN1256 character set and UTF8 NCHAR character set

import server uses AR8ISO8859P6 character set (possible charset conversion)

export client uses AR8ISO8859P6 character set (possible charset conversion)

. importing SYS's objects into SYS

. importing SYS's objects into SYS

IMP-00017: following statement failed with ORACLE error 721:

"BEGIN sys.dbms_plugts.checkCompType('COMPATSG','10.2.0.3.0'); END;"

IMP-00003: ORACLE error 721 encountered

ORA-00721: changes by release 10.2.0.3.0 cannot be used by release 10.2.0.0.0

ORA-06512: at "SYS.DBMS_PLUGTS", line 2004

ORA-06512: at line 1

IMP-00000: Import terminated unsuccessfully

Regards

Thursday, November 22, 2007

Oracle OpenWorld 2007 Presentations Available for Download

Hi,

For those who have missed OOW-2007 (like me), here is the chance to go through the presentations. Presentations are available on virtually all topics for download, but unfortunately, Tom Kyte's presentation is not downloadable.

Although there are many presentations available, I started reading Cary Millsap's "Why You Can't See Your Real Performance Problems" presentation.

Here is the link:

http://www28.cplan.com/cc176/catalog.jsp

Moreover, the username and password to download the presentations are available at the top of the page for our convenience.

You may also like to visit http://www.oracle.com/openworld/2007/keynotes.html.

Happy reading !!!!

Sunday, November 18, 2007

ORA-12537 TNS: Connection Closed

Dear readers,

When I was trying to establish client connection to one of our databases running on Oracle 10g, I received “ORA-12537 TNS: Connection Closed” error.

Upon investigation, I came across “TCP.VALIDNODE_CHECKING” parameter in “sqlnet.ora” file. This was set to “YES”. I simply commented this parameter and every thing went fine. Clients were able to establish connection to the database.

By setting TCP.VALIDNODE_CHECKING to “YES”, you inform Oracle database to allow/deny connections to the listed nodes. You may list the invited nodes using TCP.INVITED_NODES parameter in sqlnet.ora file or, alternatively, you may use the TCP.EXCLUDED_NODES parameter to disallow database connections from the listed nodes.

Hope you find this information helpful!!!

Monday, November 12, 2007

Manually Install/De-Install Database Options in Oracle Database 10gR2

Hi,

When a new database is created using DBCA, by default some of the common database options are installed like Oracle JVM and Oracle Text. But, when you manually create the database none of them get installed. You need to manually install them.

I thought of sharing Metalink Note Id’s for some of the Common Database Options which you can manually install/de-install once database has been created:

Oracle JVM

Note:276554.1 How to Reload the JVM in 10.1.0.X and 10.2.0.X

Oracle Text

Note:280713.1 Manual installation, deinstallation of Oracle Text 10gR1 and 10gR2

Oracle XML DB

Note:243554.1 How to Deinstall and Reinstall XML Database (XDB)

Oracle Multimedia

Installation, Upgrade and Downgrade information can be found in the following Manual: Oracle® interMedia

User's Guide 10g Release 2 (10.2) Appendix B Installing and Upgrading Oracle interMedia

Oracle OLAP

Note:296187.1 How To Manually Install Oracle OLAP into a 9i or 10g Database After the DB Has Been Created

Oracle Spatial

Note:270588.1 Steps for Manual Installation / Verification of Spatial 10g

Note:1070647.1 How to Deinstall Oracle Spatial keeping Oracle Locator

Oracle Label Security

Note:171155.1 How to Install / Deinstall Oracle Label Security Oracle9i/10g

Sample Schemas

Note:340112.1 How To Install Sample Schemas in 10g?

Enterprise Manager Repository

Note:114763.1 How to Create Enterprise Manager 2.1 Repository Using EMCA

Oracle Application Express

Note:445205.1 How to download and install Application Express / HTMLDB

Oracle Warehouse Builder

Note:459961.1 How to Install Warehouse Builder Repository(10.2.0.1.31) on a RAC Database on Windows and UNIX

Oracle Database Vault

Note:445092.1 How to Install Database Vault Patches on top of 10.2.0.3

Oracle Database Extensions for .NET

Note:374820.1 How to Install the Oracle Database Extensions for .NET (ODE.NET)

Oracle Data Mining

Note:297551.1 How to remove the Data Mining Option from the database

Hope you find this document helpful :)

When a new database is created using DBCA, by default some of the common database options are installed like Oracle JVM and Oracle Text. But, when you manually create the database none of them get installed. You need to manually install them.

I thought of sharing Metalink Note Id’s for some of the Common Database Options which you can manually install/de-install once database has been created:

Oracle JVM

Note:276554.1 How to Reload the JVM in 10.1.0.X and 10.2.0.X

Oracle Text

Note:280713.1 Manual installation, deinstallation of Oracle Text 10gR1 and 10gR2

Oracle XML DB

Note:243554.1 How to Deinstall and Reinstall XML Database (XDB)

Oracle Multimedia

Installation, Upgrade and Downgrade information can be found in the following Manual: Oracle® interMedia

User's Guide 10g Release 2 (10.2) Appendix B Installing and Upgrading Oracle interMedia

Oracle OLAP

Note:296187.1 How To Manually Install Oracle OLAP into a 9i or 10g Database After the DB Has Been Created

Oracle Spatial

Note:270588.1 Steps for Manual Installation / Verification of Spatial 10g

Note:1070647.1 How to Deinstall Oracle Spatial keeping Oracle Locator

Oracle Ultra Search

Note:337992.1 How to Manually Install and Uninstall Ultra Search 10gR2 Server Components

Oracle Label Security

Note:171155.1 How to Install / Deinstall Oracle Label Security Oracle9i/10g

Sample Schemas

Note:340112.1 How To Install Sample Schemas in 10g?

Enterprise Manager Repository

Note:114763.1 How to Create Enterprise Manager 2.1 Repository Using EMCA

Oracle Application Express

Note:445205.1 How to download and install Application Express / HTMLDB

Oracle Warehouse Builder

Note:459961.1 How to Install Warehouse Builder Repository(10.2.0.1.31) on a RAC Database on Windows and UNIX

Oracle Database Vault

Note:445092.1 How to Install Database Vault Patches on top of 10.2.0.3

Oracle Database Extensions for .NET

Note:374820.1 How to Install the Oracle Database Extensions for .NET (ODE.NET)

Oracle Data Mining

Note:297551.1 How to remove the Data Mining Option from the database

Thanks Gerrit for Oracle Data Mining & Oracle Spatial MOS document id's.

Hope you find this document helpful :)

Saturday, October 27, 2007

Oracle 11g for Windows is available for download

Dear all,

Most awaited Windows release of Oracle 11g is now available for download. You may download it from here:

http://www.oracle.com/technology/software/products/database/index.html

Wishing you all a happy download!!

Regards

Monday, October 01, 2007

"1Z1-050: Oracle Database 11g New Features" Beta Exam declared

Oracle University has announced beta exam of "Oracle Database 11g New Features". As usual, the price of this beta exam is also $50.

Well, beta exams are really time consuming and this one is for more than 3 hours (190 minutes). So, get your coffee ready before proceeding to the exam.

More information can be obtained from the below URL:

http://education.oracle.com/pls/web_prod-plq-dad/db_pages.getpage?page_id=41&p_exam_id=1Z0_050

Note: 30 November, 2007 is the Exam Close date. Hurry ....

My best wishes for all beta exam takers !!!

Monday, September 24, 2007

Use of Oracle Sequences in PL/SQL Expressions

In this post, I will be discussing yet another new feature of Oracle database 11g. Well, this new feature is related to Oracle Sequences.

With Oracle Database 11g, we can use SEQUENCE.NEXTVAL in PL/SQL expression instead of writing a SELECT statement. Here is an example:

Using SEQUENCE.NEXTVAL in PL/SQL assignment simply enhances readability, but, under the covers, Oracle rewrites the assignment into a SELECT statement. To verify the same, I traced a small PL/SQL block using 10046 trace as shown below:

Using SEQUENCE.NEXTVAL in PL/SQL assignment simply enhances readability, but, under the covers, Oracle rewrites the assignment into a SELECT statement. To verify the same, I traced a small PL/SQL block using 10046 trace as shown below:

Following is an extract from the trace file:

As you may see, Oracle has re-written the expression into equivalent SQL statement. Performance wise, this new feature does not give any benefit but the advantage we get is readability.

Thanks for reading :)

Tuesday, September 18, 2007

Configure Controlfile Autobackup’s

Hi,

You can configure automatic backup of your control file and server parameter file (SPFILE) using RMAN. RMAN backs up control file and SPFILE (if used to start the database) in one of two situations:

1) when a successful backup must be recorded in the RMAN repository, and

2) when a structural change to the database affects the contents of the control file which therefore must be backed up. Structural changes to database include: adding a new tablespace, altering the state of a tablespace or datafile, renaming a file, and so on.

The server process is responsible for performing control file autobackup when there is any structural change.

By default, control file autobackups are turned off. Control file autobackups can be turned on using the following command in RMAN:

C:\> rman target / RMAN> CONFIGURE CONTROLFILE AUTOBACKUP ON; old RMAN configuration parameters: CONFIGURE CONTROLFILE AUTOBACKUP OFF; new RMAN configuration parameters: CONFIGURE CONTROLFILE AUTOBACKUP ON; new RMAN configuration parameters are successfully storedWe may set the directory and format of backup files using the following RMAN command:

RMAN> CONFIGURE CONTROLFILE AUTOBACKUP FORMAT FOR DEVICE TYPE DISK TO 'C:\db10g\cfbackup\db10g_%F'; new RMAN configuration parameters: CONFIGURE CONTROLFILE AUTOBACKUP FORMAT FOR DEVICE TYPE DISK TO 'C:\db10g\cfbackup\db10g_%F'; new RMAN configuration parameters are successfully storedAll autobackup formats must include %F variable. %F expands to “C-XXXXXXXXX-YYYYMMDD-NN”, where: XXXXXXXXX – database id YYYYMMDD – day, when backed up NN – change number during day, starts with 00, and represented in hexadecimal At this point, my “C:\db10g\cfbackup”directory is empty:

C:\db10g\cfbackup>dir Volume in drive C is SYSTEM Volume Serial Number is AC21-0462 Directory of C:\db10g\cfbackup 18/09/2007 11:02 AM . 18/09/2007 11:02 AM .. 0 File(s) 0 bytes 2 Dir(s) 4,451,540,992 bytes freeTo test the control file autobackup, I will add a new tablespace:

SQL> create tablespace dropme datafile 'C:\db10g\cfbackup\dropme.dbf' size 10m; Tablespace created.The above command should trigger an autobackup of controlfile, let me see if any file is created in “C:\db10g\cfbackup” folder:

C:\db10g\cfbackup>dir Volume in drive C is SYSTEM Volume Serial Number is AC21-0462 Directory of C:\db10g\cfbackup 18/09/2007 12:19 PM. 18/09/2007 12:19 PM.. 18/09/2007 12:19 PM 7,340,032 DB10G_C-15597741-20070918-02 18/09/2007 12:19 PM 10,493,952 DROPME.DBF 2 File(s) 17,833,984 bytes 2 Dir(s) 4,433,707,008 bytes freeAs we may see, a file "DB10G_C-15597741-20070918-02" is created. This is the autobackup of control file and SPFILE performed by the server process. At the same time, the database writes a message containing the complete path of the backup piece and device type to the alert log.

Tue Sep 18 12:19:37 2007 create tablespace dropme datafile 'C:\db10g\cfbackup\dropme.dbf' size 10m Tue Sep 18 12:19:37 2007 Starting control autobackup Control autobackup written to DISK device handle 'C:\DB10G\CFBACKUP\DB10G_C-15597741-20070918-02' Completed: create tablespace dropme datafile 'C:\db10g\cfbackup\dropme.dbf' size 10mControl file autobackups can be turned off anytime using “CONFIGURE CONTROLFILE AUTOBACKUP OFF” command in RMAN. Conclusion: With a control file autobackup, RMAN can recover database even if the current control file, recovery catalog, and server parameter file are inaccessible. Regards

Saturday, September 15, 2007

An Unusual Export Error

Hi,

A full database export was completing with the following errors in one of our UAT database (Oracle 10g Release 2):

EXP-00008: ORACLE error 1031 encountered ORA-01031: insufficient privileges ORA-06512: at "SYS.DBMS_EXPORT_EXTENSION", line 257 ORA-06512: at line 1 EXP-00078: Error exporting metadata for index TB_SDN_EXT_IDX. Index creation will be skippedStrange thing to note is the error message “ORA-01031: insufficient privileges” and the error is raised only for indexes of type “DOMIAN”. The user executing export has “EXP_FULL_DATABASE” and “IMP_FULL_DATABASE” roles granted. We have been encountering these errors only after installing “Oracle Text” option, before installing “Oracle Text”, the exports were running successfully. To resolve the problem we have to explicitly grant “SELECT ANY TABLE” privilege to the exporting user. Well, it’s logical that when a user has “EXP_FULL_DATABASE” role granted there is no need to grant “SELECT ANY TABLE” privilege. But still, that solved our problem. Regards

Thursday, September 06, 2007

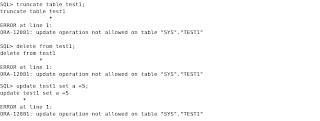

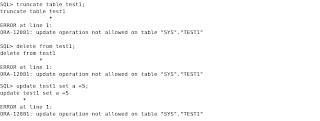

Read-Only Tables in Oracle 11g

In pre-Oracle 11g, one option to place a table in read-only mode is to create a trigger on that table that raises an exception on INSERT, UPDATE, and DELETE. In Oracle Database 11g, you can place a table in read-only mode by merely executing ALTER TABLE…READ ONLY statement.

Following is a simple test:

I create a test table “TEST1” and insert a dummy record.

Using the ALTER TABLE …READ ONLY statement, I place “TEST1” table in read-only mode:

Using the ALTER TABLE …READ ONLY statement, I place “TEST1” table in read-only mode:

Once a table is marked as read-only, all DML transactions are protected against that table.

Once a table is marked as read-only, all DML transactions are protected against that table.

Any attempt to modify the “TEST1” table results in ORA-12081 error.

You can find out whether a table is in read-only mode by querying “READ_ONLY” column of “USER_TABLES” view. A value of “YES” indicates that a table is read-only while a value of “NO” indicates a table is in read-write mode.

Any attempt to modify the “TEST1” table results in ORA-12081 error.

You can find out whether a table is in read-only mode by querying “READ_ONLY” column of “USER_TABLES” view. A value of “YES” indicates that a table is read-only while a value of “NO” indicates a table is in read-write mode.

At any time, you may place the table back in read-write mode using ALTER TABLE…READ WRITE statement.

At any time, you may place the table back in read-write mode using ALTER TABLE…READ WRITE statement.

Read Oracle Database 11g Administrators Guide, for a complete list of allowed and barred operations on read-only tables.

Read Oracle Database 11g Administrators Guide, for a complete list of allowed and barred operations on read-only tables.

Using the ALTER TABLE …READ ONLY statement, I place “TEST1” table in read-only mode:

Using the ALTER TABLE …READ ONLY statement, I place “TEST1” table in read-only mode:

Once a table is marked as read-only, all DML transactions are protected against that table.

Once a table is marked as read-only, all DML transactions are protected against that table.

Any attempt to modify the “TEST1” table results in ORA-12081 error.

You can find out whether a table is in read-only mode by querying “READ_ONLY” column of “USER_TABLES” view. A value of “YES” indicates that a table is read-only while a value of “NO” indicates a table is in read-write mode.

Any attempt to modify the “TEST1” table results in ORA-12081 error.

You can find out whether a table is in read-only mode by querying “READ_ONLY” column of “USER_TABLES” view. A value of “YES” indicates that a table is read-only while a value of “NO” indicates a table is in read-write mode.

At any time, you may place the table back in read-write mode using ALTER TABLE…READ WRITE statement.

At any time, you may place the table back in read-write mode using ALTER TABLE…READ WRITE statement.

Read Oracle Database 11g Administrators Guide, for a complete list of allowed and barred operations on read-only tables.

Read Oracle Database 11g Administrators Guide, for a complete list of allowed and barred operations on read-only tables.

Birla Institute of Technology and Science (BITS), Pilani

Dear all,

Recently, Outlook Magazine has ranked BITS Pilani as number ONE engineering college in India. BITS Pilani has two campuses in India and one in Dubai.

I am one of the student of the most prestigious engineering college of India. I did my Master of Science (MS) degree in "Software Systems" from BITS-Pilani.

Read what Wikipedia’s has to say about BITS-Pilani.

Regards

Tuesday, August 28, 2007

Installing Oracle Database 11g on Oracle Enterprise Linux 5

Dear readers,

Yesterday, I successfully installed Oracle Enterprise Linux 5 and Oracle Database 11g. The installation was very smooth without any problems. You may download Oracle Enterprise Linux 5 from here and Oracle Database 11g from here.

You may find this document to be very helpful while installing Oracle Enterprise Linux 5. Although, “welcome.html” file is very handy in guiding you through the database installation but this guide lists all the instructions specifically required to install Oracle Database 11g on Oracle Enterprise Linux 5.

Well, there are many new features which I would like to test.

Regards

Saturday, August 25, 2007

SQL Functions Revisited

I was reading “SQL Reference” manual and came across some interesting SQL functions which I thought of discussing here.

COALESCE

COALESCE function accepts a varying length list of arguments and returns the first non-NULL value/expression in the list. Oracle uses short-circuit evaluation, i.e., it starts scanning from the left for the first not null value and immediately terminates upon finding the first not-null occurrence instead of scanning all the expressions is the list.

You can use COALESCE as a variety of the CASE expression. For example,

COALESCE( expr1, expr2)

is equivalent to:

CASE WHEN expr1 IS NOT NULL THEN expr1 ELSE expr2 END

SQL> select coalesce(null, null, null, null,15) from dual;

COALESCE(NULL,NULL,NULL,NULL,15)

--------------------------------

15

SQL> select coalesce(null, 20, null, 1/0, null) from dual;

COALESCE(NULL,20,NULL,1/0,NULL)

-------------------------------

20

Instead of running into error (ORA-01476: divisor is equal to zero) for the above SQL, the query returns 20 as the output. This is because Oracle immediately stops searching the list when it finds the first non-null value.

EXTRACT

EXTRACT function returns the value of a specified datetime field from a datetime expression.

SQL> select extract(month from sysdate) from dual;

EXTRACT(MONTHFROMSYSDATE)

-------------------------

8

SQL> select extract(year from sysdate) from dual;

EXTRACT(YEARFROMSYSDATE)

------------------------

2007

NULLIF

This function takes two parameters and compares the first parameter with the second parameter. If they are equal, then the function returns null otherwise it returns the first parameter.

SQL> select nullif(1, 2) from dual;

NULLIF(1,2)

-----------

1

SQL> select nullif(1, 1) from dual;

NULLIF(1,1)

-----------

REMAINDER

This function takes two numeric arguments (non-numeric values are implicitly converted to numeric values) and returns the remainder of expr2 divided by expr2.

SQL> select remainder(5624,54) from dual;

REMAINDER(5624,54)

------------------

8

Thanks for reading :-)

Thursday, August 23, 2007

To cache or not to cache an Oracle Sequence?

Last week, when I was reviewing AWR report of a busy OLTP database, I came across a dictionary related “Update” statement. This update statement was listed in top 10 SQL’s under “SQL ordered by Parse Calls” and “SQL ordered by Executions” sections of the AWR report and was parsed and executed 698 times during 30 minutes (A 30 minutes AWR report).

Here is the complete SQL Statement:

“update seq$ set increment$=:2, minvalue=:3, maxvalue=:4, cycle#=:5, order$=:6, cache=:7, highwater=:8, audit$=:9, flags=:10 where obj#=:1”

Immediately, I queried Oracle data dictionary for a list of sequences of one of our most important schema. Following is the query and its output:

SQL> select sequence_name, cache_size, last_number from dba_sequences where sequence_owner = 'PISYS'; SEQUENCE_NAME CACHE_SIZE LAST_NUMBER ------------------------------ ---------- ----------- SEQ_AUDIT_DETAILS 0 6728991 SEQ_TRANS_LOG 0 1 SEQ_BATCH_LOG 20 991Sequence “SEQ_AUDIT_DETAILS” seems to be a culprit here, it has been used for more than 6 Million times and is not cached. Upon further investigation, it was revealed that this sequence is used by an audit detail table to generate unique serial. I altered the sequence definition to cache 1000 values. Caching instructs Oracle to pre-allocate (in my case 1000) values in the memory for faster access.

SQL> alter sequence pisys.seq_audit_details cache 1000;The above ALTER command did a magic and the UPDATE statement vanished from the AWR reports. We need to cache sequences whenever possible to avoid extra physical I/O. Thanks for reading and comments are welcome.

Saturday, August 18, 2007

Is this a bug or an error?

Erik has posted really an interesting question on Laurent’s blog. It caught my attention and I thought of discussing it in greater detail over here.

What happens when you divide any number by a zero? You will end up with an error.

SQL> select 1/0 from dual; select 1/0 from dual * ERROR at line 1: ORA-01476: divisor is equal to zeroThat’s true! The SQL query ends up with ORA-01476 error. Now, what happens when I run this query? “select 1 from dual where exists (select 1/0 from dual)” Well, even this should result in an error. Ok, let’s run this query:

SQL> select 1 from dual where exists (select 1/0 from dual);

1

----------

1

SQL>

Stupid! Isn’t it?

The query executes successfully instead of returning an error. That should be a bug. Wait a minute! Before arriving at any conclusion let’s test it again, but this time in a different way.

I will create a function which prints a message on console and returns a value.

SQL> create or replace function myfunc return number is

2 begin

3 dbms_output.put_line('test function');

4 return(99);

5 end;

6 /

Function created.

SQL> set serveroutput on

SQL> select myfunc from dual;

FUNC

----------

99

test function

When I use the function in a query, it returns the value and also prints the message “test function”.

Now, let’s put the function in the subquery’s Where clause and see what happens.

SQL> select 1 from dual where exists (select * from dual where myfunc = 99); 1 ---------- 1 test functionThe function gets execute and the query returns the desired output. Now, let’s place the function in the subquery’s Select list instead of “1/0” and test the query.

SQL> select 1 from dual where exists (select myfunc from dual); 1 ---------- 1 SQL>As seen the query executes successfully but doesn’t print me the message “test function”. Why? The answer is again hidden in Oracle documentation. The documentation for EXISTS is as below: "An EXISTS condition tests for existence of rows in a subquery. It returns TRUE if a subquery returns at least one row.” It’s clear that Oracle simply tests for the existence of rows in a subquery and will simply ignore the select list. However, the select list goes through syntax and semantics checks. Oracle never fetches the columns listed in the select list and it’s logical also. The EXISTS condition has nothing to do with the select list rather its interest lies in existence of rows. This is the reason why “select 1 from dual where exists (select 1/0 from dual);” executes successfully. Thanks for reading ;-)

Friday, August 10, 2007

Oracle 11g Hits the Stands

Dear readers,

Oracle has released the Oracle Database 11g Release 1 (11.1.0.6.0)Standard Edition, Standard Edition One, and Enterprise Edition.

Documentation for Oracle 11g can be downloaded from here.

Regards

Saturday, August 04, 2007

25 Ways to Distinguish Yourself

Hello,

Lately, I have come across this beautiful document by Rajesh Shetty. The document is titled “25 ways to distinguish yourself”. In this document, Mr. Shetty discusses why and how to distinguish yourself. This document is extremely well-crafted and is really a must read document for every professional.

Thanks for reading :-))

Saturday, July 28, 2007

How to find Cluster Interconnect IP address from Oracle Database

Hello,

The easiest way to find the cluster interconnect is to view the “hosts” file. The “hosts” file is located under:

UNIX .......... /etc

Windows ...... C:\WINDOWS\system32\drivers\etc

Following are the ways to find the cluster interconnect through Oracle database:

1) Query X$KSXPIA

The following query provides the interconnect IP address registered with Oracle database:

SQL> select IP_KSXPIA from x$ksxpia where PUB_KSXPIA = 'N'; IP_KSXPIA ---------------- 192.168.10.11This query should be run on all instances to find the private interconnect IP address used on their respective nodes. 2) Query GV$CLUSTER_INTERCONNECTS view Querying GV$CLUSTER_INTERCONNECTS view lists the interconnect used by all the participating instances of the RAC database.

SQL> select INST_ID, IP_ADDRESS from GV$CLUSTER_INTERCONNECTS; INST_ID IP_ADDRESS ---------- ---------------- 1 192.168.10.11 2 192.168.10.123) ASM and Database log We can find out the cluster interconnect used by viewing the log file of both ASM instance and the database instance. When the ASM instance is started it registers the private interconnect used. Following is the excerpt from the ASM alert log on node 1:

: : Cluster communication is configured to use the following interface(s) for this instance 192.168.10.11 : :Similarly, the database instance also registers the private interconnect with its instance and following message is recorded in the alert log:

: : Cluster communication is configured to use the following interface(s) for this instance 192.168.10.11 : :4) CLUSTER_INTERCONNECT initialization parameter This parameter provides Oracle with information on the availability of additional cluster interconnects. It overrides the default interconnect settings at the OS level with a preferred one. You can view the current value of this parameter by issuing the following in SQL*Plus, connected as SYS user: SQL> show parameter cluster_interconect Thanks for reading :-)

Wednesday, July 25, 2007

A Nice and Handy UNIX Guide

Most of the times we struggle to find the equivalent commands on other Operating Systems, say from AIX to Linux. Normally, we google and after a hard work we ended up with one equivalent command.

I have found the following link to be of great help, it compares commands between AIX, HP-UX, Linux, Solaris, and Tru64.

http://unixguide.net/cgi-bin/unixguide.cgi

Now it’s easy to answer questions like “What is the equivalent command for on HP-UX for df -k on AIX??”

Thanks for reading :-))

Saturday, July 21, 2007

A closer look at "SYSDBA" and "DBA"

SYSDBA and DBA are often misunderstood. In short, SYSDBA is a system privilege whereas DBA is a role.

The DBA role does not include the SYSDBA or SYSOPER system privileges. SYSDBA and SYSOPER are special administrative privileges that allow an administrator to perform basic database administration tasks, such as creating the database, instance startup and shutdown, drop a database, open and mount a database, place database in archivelog mode or remove it from archivelog mode.

About SYSDBA system privilege

The SYSDBA and SYSOPER system privileges allow access to a database instance even when the database is not open. Control of these privileges is totally outside of the database itself. The SYSDBA and SYSOPER privileges can also be thought of as types of connections that enable you to perform certain database operations for which privileges cannot be granted in any other fashion. For example, if you have the SYSDBA privilege, you can connect to the database by specifying CONNECT AS SYSDBA.

Query users having SYSDBA or SYSOPER privileges:

SQL> column sysdba format a10 SQL> column sysoper format a10 SQL> select * from v$pwfile_users; USERNAME SYSDBA SYSOPER ------------------------------ ---------- ---------- SYS TRUE TRUE SCOTT TRUE FALSEAbout DBA Role A predefined role, named "DBA", is automatically created with every Oracle database. This role contains all database system privileges but SYSDBA and SYSOPER are excluded. Therefore, it is very powerful and should be granted only to fully functional database administrators. Query all roles that exist in the database:

SQL> select role from dba_roles; ROLE ------------------------------ CONNECT RESOURCE DBA : :Query all users to whom DBA role is granted:

SQL> select * from dba_role_privs where granted_role = 'DBA'; GRANTEE GRANTED_ROLE ADM DEF ------------------------------ ------------------------------ --- --- SYS DBA YES YES SYSMAN DBA NO YES LETS DBA NO YES SYSTEM DBA YES YESAdditionally, you may not grant SYSDBA privilege to a role. ORA-01931 error is raised when you try to grant it to a role.

SQL> grant sysdba to dba; grant sysdba to dba * ERROR at line 1: ORA-01931: cannot grant SYSDBA to a roleA database user can be granted both DBA and SYSDBA privilege.

SQL> select * from dba_role_privs where granted_role = 'DBA'; GRANTEE GRANTED_ROLE ADM DEF ------------------------------ ------------------------------ --- --- SYS DBA YES YES SCOTT DBA NO YES SYSMAN DBA NO YES LETS DBA NO YES SYSTEM DBA YES YESHappy reading !!!

Saturday, July 07, 2007

Eliminating Re-Creation of Control File when increasing the value of MAXDATAFILES parameter

With the enhancements in Oracle 10g Release 2, we can eliminate control file recreation for changing values of MAXLOGFILE, MAXLOGMEMBERS, MAXLOGHISTORY, MAXDATAFILES, and MAXINSTANCES parameters. In earlier versions of Oracle database, you have to recreate the controlfile in order to modify any of these parameters.

These parameters are defined at the time of database creation. MAXDATAFILES parameter indicates the maximum number of datafiles that a database can accommodate. This parameter also affects the size of the of the control file.

DB_FILES initialization parameter specifies the maximum number of database files that can be opened for the database. If you modify this parameter then the instance should be bounced for the new value to take effect.

I have specified MAXDATAFILES to be 20 when creating my test database and started my instance with DB_FILES=19. We can check the current value of MAXDATAFILES for the database by:

1) Creating a trace of the control file, and,

2) Querying the V$CONTROLFILE_RECORD_SECTION view.

Check the current value of DB_FILES initialization parameter of the instance:

SQL> show parameter db_files NAME TYPE VALUE ---------- ----------- ----------- db_files integer 20Query the current value of MAXDATAFILES parameter defined for the database:

SQL> select type, record_size, records_total, records_used from v$controlfile_record_section where type = 'DATAFILE'; TYPE RECORDS_TOTAL RECORDS_USED ---------------------------- ------------- ------------ DATAFILE 20 3 1 row selected.Alternatively, we can check the value of MAXDATAFILES by creating a trace of the control file.

SQL> alter database backup controlfile to trace as 'c:\testdb\control\control.txt'; Database altered.Open the file control.txt using any text editor and view the current setting for the MAXDATAFILES parameter. Let’s, find out how many datafiles exist in the database:

SQL> select count(*) from dba_data_files; COUNT(*) ---------- 3I will create another 17 tablespaces with 17 datafiles using the below script:

SQL> select 'create tablespace drop_ts' || rownum || 2 ' datafile ''c:\testdb\data\drop_ts' || rownum || 3 '.dbf'' size 10M;' txt 4 from all_objects where rownum <18; TXT ------------------------------------------------------------------------------- create tablespace drop_ts1 datafile 'c:\testdb\data\drop_ts1.dbf' size 10M; create tablespace drop_ts2 datafile 'c:\testdb\data\drop_ts2.dbf' size 10M; create tablespace drop_ts3 datafile 'c:\testdb\data\drop_ts3.dbf' size 10M; create tablespace drop_ts4 datafile 'c:\testdb\data\drop_ts4.dbf' size 10M; create tablespace drop_ts5 datafile 'c:\testdb\data\drop_ts5.dbf' size 10M; create tablespace drop_ts6 datafile 'c:\testdb\data\drop_ts6.dbf' size 10M; create tablespace drop_ts7 datafile 'c:\testdb\data\drop_ts7.dbf' size 10M; create tablespace drop_ts8 datafile 'c:\testdb\data\drop_ts8.dbf' size 10M; create tablespace drop_ts9 datafile 'c:\testdb\data\drop_ts9.dbf' size 10M; create tablespace drop_ts10 datafile 'c:\testdb\data\drop_ts10.dbf' size 10M; create tablespace drop_ts11 datafile 'c:\testdb\data\drop_ts11.dbf' size 10M; create tablespace drop_ts12 datafile 'c:\testdb\data\drop_ts12.dbf' size 10M; create tablespace drop_ts13 datafile 'c:\testdb\data\drop_ts13.dbf' size 10M; create tablespace drop_ts14 datafile 'c:\testdb\data\drop_ts14.dbf' size 10M; create tablespace drop_ts15 datafile 'c:\testdb\data\drop_ts15.dbf' size 10M; create tablespace drop_ts16 datafile 'c:\testdb\data\drop_ts16.dbf' size 10M; create tablespace drop_ts17 datafile 'c:\testdb\data\drop_ts17.dbf' size 10M; 17 rows selected. SQL>Querying the data dictionary shows that we have 20 datafiles in our database.

SQL> select count(*) from dba_data_files; COUNT(*) ---------- 20 1 row selected.Let us try to create one more tablespace and see what happens:

SQL> create tablespace drop_ts18 datafile 'c:\testdb\data\drop_ts18.dbf' size 10M; create tablespace drop_ts18 datafile 'c:\testdb\data\drop_ts18.dbf' size 10M * ERROR at line 1: ORA-00059: maximum number of DB_FILES exceededThe command returns with an error indicating that we have already reached the maximum number of datafiles and it cannot create new datafiles anymore. At this point, we have to shutdown the database, increase the value of DB_FILES initialization parameter, and start the database. Shut the database down:

SQL> shutdown immediate;I modify the DB_FILES parameter in my PFILE to 25 (If you are using SPFILE then create a PFILE) and start the database:

SQL> startup pfile=c:\testdb\pfile\initTESTDB.oraQuery the DB_FILES initialization parameter after instance startup:

SQL> show parameter db_files NAME TYPE VALUE ------------------------------------ ----------- ------------------------- db_files integer 25 SQL> select type, record_size, records_total, records_used 2 from v$controlfile_record_section 3 where type = 'DATAFILE'; TYPE RECORDS_TOTAL RECORDS_USED ---------------------------- ------------- ------------ DATAFILE 20 20Yeah, the new value is in effect. But querying the RECORDS_TOTAL column of V$CONTROLFILE_RECORD_SECTION view displays the maximum limit as 20. Let us try to create a new tablespace with one datafile and see what happens.

SQL> create tablespace drop_ts18 datafile 'c:\testdb\data\drop_ts18.dbf' size 10M; Tablespace created.Wow, the tablespace is created. Lets query again V$CONTROLFILE_RECORD_SECTION view:

SQL> select type, records_total, records_used 2 from v$controlfile_record_section 3 where type = 'DATAFILE'; TYPE RECORDS_TOTAL RECORDS_USED ---------------------------- ------------- ------------ DATAFILE 52 21The control file has automatically expanded to accommodate the new datafile information. The RECORDS_TOTAL column value has increased to 52 (20 original + 32 the default value) and the RECORDS_USED column is at 21 (meaning we have 21 datafiles in our database). The message recorded in the alert.log is :

create tablespace drop_ts18 datafile 'c:\testdb\data\drop_ts18.dbf' size 10M Tue Jul 03 12:46:15 2007 Expanded controlfile section 4 from 20 to 52 records Requested to grow by 32 records; added 1 blocks of records Completed: create tablespace drop_ts18 datafile 'c:\testdb\data\drop_ts18.dbf' size 10MNote: Temp files associated with temporary tablespace do not account for MAXDATAFILES limit. Regards

Saturday, June 30, 2007

“cursor: pin S wait on X” Wait Event in Oracle 10g Release 2

After a pleasant weekend, when I returned back to work I found one of our development databases on Oracle 10g was in a hung state. Later, upon investigation I found 23 sessions were waiting on “cursor: pin S wait on X” wait event.

Searching on Oracle Metalink, I came to know that this is a bug in Oracle 10.2.0.3 on all platforms. The note explains that this could happen when you typically use DBMS_STATS to gather statistics.

We had a job scheduled to run on the weekend to gather statistics. I identified the session which was gathering statistics and killed that session both from Oracle and OS. Soon after that everything was back to normal.

A patch is available on Oracle Metalink which addresses this issue.

References from Metalink:

Subject: 10.2.0.3 Patch Set - Availability and Known Issues

Note:401435.1

Last Revision Date: 14-JUN-2007

Subject: Bug 5907779 - Self deadlock hang on "cursor: pin S wait on X" (typically from DBMS_STATS)

Note:5907779.8

Last Revision Date: 10-APR-2007

Happy reading !!!

Wednesday, June 13, 2007

How to find my Windows is 32-bit or 64-bit?

How to find my Windows is 32-bit or 64-bit?

To find the answer to this question, follow this link

http://support.f-secure.com/enu/home/supportissue/fsis2007/before_install_q07.shtml

How to find the OS block size of my Windows OS?

Microsoft defines Cluster as “In data storage, the smallest amount of disk space that can be allocated to hold a file. All file systems used by Windows organize hard disks based on clusters, which consist of one or more contiguous sectors.”

To find the OS block size:

Right Click on “My Computer” icon on the desktop

Click on “Manage”

Expand “Storage” and select “Disk Defragmenter”

Press the “Analyze” button

Once your windows completes the analysis, it presents you with a report.

Press “View Report” button

The block size is indicated by “Cluster Size”. In my case it’s 4K.

How to set the OS block size on my Windows OS?

Open the Windows Explorer.

Right Click on your “C drive” and click on “Format”.

A window is presented with couple of options. The OS block size is displayed by a drop-down list labeled “Allocation unit size”.

How to set the OS block size on my Windows OS?

Open the Windows Explorer.

Right Click on your “C drive” and click on “Format”.

A window is presented with couple of options. The OS block size is displayed by a drop-down list labeled “Allocation unit size”.

How to set the OS block size on my Windows OS?

Open the Windows Explorer.

Right Click on your “C drive” and click on “Format”.

A window is presented with couple of options. The OS block size is displayed by a drop-down list labeled “Allocation unit size”.

How to set the OS block size on my Windows OS?

Open the Windows Explorer.

Right Click on your “C drive” and click on “Format”.

A window is presented with couple of options. The OS block size is displayed by a drop-down list labeled “Allocation unit size”.

Sunday, May 20, 2007

How We Upgraded our Oracle 9i Database to Oracle 10g Database with Near-Zero Downtime

Dear all,

My article has been accepted and is published under Metalink's Customer Knowledge Exchange Program. The title of my article is "How We Upgraded our Oracle 9i Database to Oracle 10g Database with Near-Zero Downtime" and the Metalink note id is 431430.1.

Abstract of the document:

An Oracle database upgrade is a process of transforming an existing Oracle database into a later or the current release of the Oracle Database. This upgrade process normally requires a significant amount of database down time. This task of upgrade becomes more difficult for businesses which need to be 24*7 operational and any outage negatively affects business operations and customer service. In 24*7 organizations like Banks the database availability is so crucial that the downtime is bargained in minutes.

Minimizing downtime is a challenge every organization faces. With the advent of Oracle Streams, this challenge merely remains a challenge. Oracle Streams replication enables us to perform an upgrade with near-zero downtime. This is accomplished by configuring Oracle Streams on the existing Oracle 9i database which captures all the changes on the source database and replicates the changes to the target database (Oracle 10g). The rest of the document presents a step-by-step approach of setting the Oracle Streams replication and performing an Upgrade to Oracle 10g database.

Have a look at it and pass your comments.

Happy reading :)

Updated: 14 May, 2008

Sunday, April 29, 2007

Killing Long Running Jobs

Hi all,

I have often seen DBA's bouncing their database to get rid of stubborn jobs. In this post, I describe a way to remove the long running jobs without bouncing your database.

First, we need to identify the long running jobs, once we know what jobs are stubborn, we will mark these jobs as 'BROKEN'. We then kill the sessions linked with the jobs, and optionally kill the OS processes associated with these sessions. Next, we mark the jobs back as 'UNBROKEN' and finally run the job.

Note: Before marking the jobs as Broken or killing the session, run the following SQL’s and store the output of each query.

1. Find all the Long Running Jobs

Use the following query to get a detailed information on long running jobs

select djr.Sid, dj.Log_User, dj.Job, dj.Broken, dj.Failures, dj.Last_Date, dj.Last_Sec, dj.This_Date, dj.This_Sec, dj.Next_Date, dj.Next_Sec, dj.Interval, dj.What from Dba_Jobs dj, Dba_Jobs_Running djr where dj.job = djr.job;2. Mark the Jobs as "BROKEN' Using the below query, I create a script to mark all the long running jobs as broken.

select ‘Exec DBMS_JOB.BROKEN(‘j.job ‘,TRUE);’ from dba_jobs dj, dba_jobs_running djr where dj.job = djr.job;3. Kill Oracle Sessions Using the below SQL query, you can kill all the sessions associated with the long running jobs.

select 'ALTER SYSTEM KILL SESSION ''' s.sid','s.serial#''';' from dba_jobs dj, dba_jobs_running djr, v$process p , v$session s where dj.job = djr.job and p.addr = s.paddr and djr.sid = s.sid;4. Kill the OS Sessions Optionally, you may kill the OS sessions to speedup PMON in cleaning the killed session undo data.

select 'Kill -9 ' p.spid from dba_jobs dj, dba_jobs_running djr, v$process p , v$session s where dj.job = djr.job and p.addr = s.paddr and djr.sid = s.sid;5. Mark the jobs as Unbroken Wait till all the sessions marked as ‘KILLED’ have been really killed and disappear from the V$SESSION view. Next, mark all the jobs as unbroken using the below query.

select ‘Exec DBMS_JOB.BROKEN(‘||j.job|| ‘,FALSE);’

from dba_jobs dj,

dba_jobs_running djr

where dj.job = djr.job;

6. Manually Run the jobs

Now that all the long running jobs have disappeared and its time to put the jobs back in the job queue.

select ‘Exec DBMS_JOB.RUN(‘||j.job|| ‘);’

from dba_jobs dj,

dba_jobs_running djr

where dj.job = djr.job;

7. Query ALL_JOBS views

Query the ALL_JOBS view to see that all the jobs are back in motion.

This way you will put the jobs back in trace without the need to recycle the database.

Happy reading !!!

Tuesday, April 03, 2007

Virtual Indexes in Oracle

In the world of virtualization, almost everything is getting virtualized like Virtual Machines, Virtual IP Address, and Virtual reality and so on. There’s one more addition to it “Virtual Indexes”. This is an undocumented feature of Oracle and the virtual indexes are also referred to as “Fake Indexes”.

Virtual Indexes are pseudo-indexes which do not exist as a segment in database. They can be used to test an index usage prior to actually creating one. Virtual indexes allows the CBO to evaluate the index for a SQL statement by building an explain plan that is aware of the new virtual index. This allows a DBA to answer “if an index in created would the optimizer actually use it?” question.

The virtual indexes are very helpful when you have to test against a table with huge data.

Creating a Virtual Index

Create a table using the following statement:

SQL> create table v_test as select object_id rno, object_name name from all_objects; Table created.You use the “NOSEGMENT” clause with the CREATE INDEX statement to create a Virtual Index.

SQL> create index v_ind on v_test(rno) NOSEGMENT; Index created.Query the USER_SEGMENTS view to verify whether the index segment is created or not.

SQL> select segment_name, bytes from user_segments where segment_name = 'V_IND'; no rows selected SQL> SQL> select segment_name, bytes from user_segments where segment_name = 'V_TEST'; SEGMENT_NAME BYTES --------------- ---------- V_TEST 196608As seen above, you have a segment created for the table but none for the index. Using of Virtual Indexes By setting the hidden parameter “_use_nosegment_indexes“, you inform the optimizer to use any underlying virtual indexes while generating an Explain Plan output. Note: Hidden parameters should be used after consulting Oracle Support.

SQL> alter session set "_use_nosegment_indexes" = true;Now, use the Explain Plan command to generate the plan using the following query:

SQL> explain plan for select * from v_test where rno = 5; Explained. PLAN_TABLE_OUTPUT ----------------------------------------------------------------------------------------- Plan hash value: 1019049159 -------------------------------------------------------------------------------------- Id Operation Name Rows Bytes Cost (%CPU) Time -------------------------------------------------------------------------------------- 0 SELECT STATEMENT 1 30 5 (0) 00:00:01 1 TABLE ACCESS BY INDEX ROWID V_TEST 1 30 5 (0) 00:00:01 * 2 INDEX RANGE SCAN V_IND 17 1 (0) 00:00:01 --------------------------------------------------------------------------------------It is evident from the above explain plan output that our newly created virtual index can benefit us. It is also clear that the CBO knows about the virtual index existence. Now, let us trace our SQL and find out whether the virtual index will actually be used by the CBO to fetch the data. I will use both 10046 and 10053 extended trace events to trace the SQL. Tracing using 10046 To differentiate my trace file from other existing trace files and to easily identify the trace file, I set tracefile_identifier parameter for my current session, then enable the tracing, run the SQL statement and turn off the tracing as shown below:

SQL> alter session set tracefile_identifier='T10046'; SQL> alter session set events '10046 trace name context forever, level 12'; SQL> select * from v_test where rno =5; SQL> alter session set events '10046 trace name context off'; SQL> exitI have pasted the section of the trace file in which we are interested i.e., the Execution Plan. Following is the excerpt of the trace file:

================================================= : : WAIT #1: nam='SQL*Net message from client' ela= 2275 driver id=1413697536 #bytes=1 p3=0 obj#=-1 tim=906714236 STAT #1 id=1 cnt=1 pid=0 pos=1 obj=10769 op='TABLE ACCESS FULL V_TEST (cr=39 pr=0 pw=0 time=69 us)' WAIT #0: nam='SQL*Net message to client' ela= 1 driver id=1413697536 #bytes=1 p3=0 obj#=-1 tim=906718527 : : =================================================From the above report it’s clear that CBO is using a “FULL TABLE SCAN” on V_TEST to execute the query. The CBO is intelligent enough to discard the fake index and look for an otherwise optimal plan. Tracing using 10053 Similarly, I trace the same SQL statement using the 10053 extended trace.

SQL> alter session set tracefile_identifier=’T10053’; SQL> alter session set events '10053 trace name context forever, level 1'; SQL> alter session set "_use_nosegment_indexes" = true; SQL> select * from v_test where rno = 5; SQL> alter session set events '10053 trace name context off'; SQL> exitIt is evident from the trace output shown below, that the CBO is opting for a “FULL TABLE SCAN” as it is aware that V_IND is a virtual index with no segments.

============

Plan Table

============

-------------------------------------+-----------------------------------+

Id Operation Name Rows Bytes Cost Time

-------------------------------------+-----------------------------------+

0 SELECT STATEMENT 11

1 TABLE ACCESS FULL V_TEST 1 21 11 00:00:01

-------------------------------------+-----------------------------------+

Predicate Information:

----------------------

1 - filter("RNO"=5)

Conclusion:

As I mentioned earlier, this is undocumented, so use it at your own risk. Virtual indexes should be used when testing queries before creating an index on a huge table as opposed to creating a real index which might take hours depending on the size of the data.

When using the Explain plan, CBO tests the virtual index usage and will let you know whether it will be fruitful to create an index or not. But while actually executing the query it will opt for an optimal execution plan after discarding the virtual index.

Regards

Wednesday, March 14, 2007

"TCP Socket (KGAS)" Wait Event

We recently had this issue in our organization. Suddenly our Oracle Database (Oracle Database 10g Enterprise Edition Release 10.2.0.2.0 on AIX 5.3) was experiencing waits on "TCP Socket (KGAS)" event. Upon investigation, we found out that it was because the mail server was down and the procedure tries to open a new connection with the SMTP server and it takes 75 seconds to timeout on IBM-AIX. (On Windows 2003, the default timeout is 20 seconds.)

There is no way of controlling timeout from within Oracle. When you pass the timeout parameter while opening connection with the SMTP Server, it doesn’t mean that it will timeout after the specified time rather it means that the subsequent Read/Write operations will timeout.

mail_conn := Utl_Smtp.open_connection(mailhost,MAILPORT, 10);

From above, it will take 10 seconds timeout time for the Read/Write operations after establishing the connection.

The only way to reduce the timeout from 75 seconds is to set TCP Keepalive parameters at OS level. It’s nice that we can still control it but at an expense of affecting all OS-level TCP connection.

Following parameters can be used to control the timeout in IBM-AIX:

1) net.ipv4.tcp_keepalive_time (seconds)

2) net.ipv4.tcp_keepalive_intvl (seconds)

3) net.ipv4.tcp_keepalive_probes

Be careful when setting the above parameters, as this will have a Global affect. Every new connection will be affected with the new timeout time. Now we are able to:

1) Control timeout while opening connection with the SMTP server using OS-level parameters, and

2) Pass a timeout in seconds for the subsequent Read/Write operations.

Are we done with our job? No, not yet!

What if the mail server goes down soon after establishing a connection and before starting the Read/Write operation, I mean during the handshake and Email verification procedures?

What happens is that, the sessions keep waiting forever for the SMTP server’s reply. Unfortunately, you have to locate and kill them.

We haven’t tried this ourselves but I have learned that using third party Java tool we can control the entire timeout issue.

Following is the procedure we use to send emails:

Procedure Test_Mail(

sender IN VARCHAR2, -- Mail Sender's Name

recipient IN VARCHAR2, -- Primary Mail ID of the Recipient

P_MESSAGE IN LONG, -- Any mail message

mailhost IN VARCHAR2, -- Mail host (IP Address)

MAILPORT IN NUMBER -- Port Number of the Mail Host

)

IS

mail_conn Utl_Smtp.connection;

v_mail_reply Utl_Smtp.reply;

BEGIN

mail_conn := Utl_Smtp.open_connection(mailhost,MAILPORT, 10); -- Timeout after 20 seconds

Utl_Smtp.helo(mail_conn, mailhost);

Utl_Smtp.mail(mail_conn, sender);

v_mail_reply := utl_smtp.vrfy(mail_conn, recipient);

MAIL_REPLY_CODE := v_mail_reply.code ;

IF v_mail_reply.code <> 550 THEN

Utl_Smtp.rcpt(mail_conn, recipient);

utl_smtp.open_data(mail_conn);

utl_smtp.write_data( mail_conn, 'MIME-version: 1.0' utl_tcp.CRLF);

utl_smtp.write_data( mail_conn, 'Content-TYPE: text/plain; charset=Arabic(Windows)' utl_tcp.CRLF);

utl_smtp.write_data( mail_conn, 'Content-Transfer-Encoding: 8bit' utl_tcp.CRLF);

utl_smtp.write_raw_data(mail_conn, utl_raw.cast_to_raw(mesgUTL_TCP.CRLF));

utl_smtp.close_data(mail_conn);

Utl_Smtp.quit(mail_conn);

END IF ;

EXCEPTION

WHEN OTHERS THEN

Proc_Execlog(' Error in Test_mail :' SQLERRM);

Utl_Smtp.quit(mail_conn);

END;

Regards

Profiling your PL/SQL code with DBMS_PROFILER

The PL/SQL profiler provides information about PL/SQL code with regard to CPU usage and other resource usage information.

When there is a noticeable gap between user elapsed time and SQL processing elapsed time, and there is PL/SQL code involved, the PL/SQL Profilerbecomes a very useful tool. The Profiler helps in identifying the lines of PL/SQL code which are taking longer to process.

The profiler report consists of

1) Top Ten profiled source lines in terms of Total Time

2) Total Time taken to process each line of your PL/SQL code.

3) Number of times each line executed in your PL/SQL code.

Installation Procedure:

1) Connect as SYS into SQL*Plus, and execute the below command to create the package (DBMS_PROFILER):

SQL> @ORACLE_HOME/rdbms/admin/profload.sql;

2) Once DBMS_PROFILER is installed, connect as application user into SQL*Plus, and create the following repository tables:

3) Generating a report

The script profiler.sql (available on Metalink, Doc Id: 243755) generates a comprehensive HTML report on the performance data extracted by the DBMS_PROFILER package.

Useful Procedures in DBMS_PROFILER

In Oracle 10g, the DBMS_PROFILER has more than 15 procedures/functions, but we will restrict to the following: .

.

Using DBMS_Profiler: To profile a PL/SQL Library (package, procedure, function or trigger), include in its body the two calls to actually start, and complete the profiling. Use the example below on any PL/SQL Library to profile.

BEGIN

DBMS_PROFILER.START_PROFILER('any comment to identify this execution');

... /* Your PL/SQL Code */ ...

DBMS_PROFILER.FLUSH_DATA;

DBMS_PROFILER.STOP_PROFILER;

END;

/

Example Usage of DBMS_PROFILER

Create the following test procedure, execute the procedure and run the prolifer.sql script to produce the report.

create or replace procedure test_proc is

u_cnt number;

a_cnt number;

run_id number;

a number;

b number;

begin

run_id := dbms_profiler.start_profiler(to_char(sysdate,'DD-MM-YYYY HH24:MI:SS'));

dbms_output.put_line(' RUN Id: ' run_id);

select count(*) into u_cnt from user_objects;

select count(*) into a_cnt from all_objects;

for i in 1..10000 loop

a := i;

b := a;

end loop;

dbms_output.put_line('User count: ' u_cnt);

dbms_output.put_line('All count: ' a_cnt);

dbms_profiler.flush_data;

dbms_profiler.stop_profiler;

end;

/

SQL> Exec Test_Proc;

SQL> c:\profiler\profiler.sql

Below is an excerpt from the report for the above procedure:

The profiler report consists of

1) Top Ten profiled source lines in terms of Total Time

2) Total Time taken to process each line of your PL/SQL code.

3) Number of times each line executed in your PL/SQL code.

Installation Procedure:

1) Connect as SYS into SQL*Plus, and execute the below command to create the package (DBMS_PROFILER):

SQL> @ORACLE_HOME/rdbms/admin/profload.sql;

2) Once DBMS_PROFILER is installed, connect as application user into SQL*Plus, and create the following repository tables:

- PLSQL_PROFILER_RUNS,

- PLSQL_PROFILER_UNITS, and

- PLSQL_PROFILER_DATA.

3) Generating a report

The script profiler.sql (available on Metalink, Doc Id: 243755) generates a comprehensive HTML report on the performance data extracted by the DBMS_PROFILER package.

Useful Procedures in DBMS_PROFILER

In Oracle 10g, the DBMS_PROFILER has more than 15 procedures/functions, but we will restrict to the following:

.

.Using DBMS_Profiler: To profile a PL/SQL Library (package, procedure, function or trigger), include in its body the two calls to actually start, and complete the profiling. Use the example below on any PL/SQL Library to profile.

BEGIN

DBMS_PROFILER.START_PROFILER('any comment to identify this execution');

... /* Your PL/SQL Code */ ...

DBMS_PROFILER.FLUSH_DATA;

DBMS_PROFILER.STOP_PROFILER;

END;

/

Example Usage of DBMS_PROFILER

Create the following test procedure, execute the procedure and run the prolifer.sql script to produce the report.

create or replace procedure test_proc is

u_cnt number;

a_cnt number;

run_id number;

a number;

b number;

begin

run_id := dbms_profiler.start_profiler(to_char(sysdate,'DD-MM-YYYY HH24:MI:SS'));

dbms_output.put_line(' RUN Id: ' run_id);

select count(*) into u_cnt from user_objects;

select count(*) into a_cnt from all_objects;

for i in 1..10000 loop

a := i;

b := a;

end loop;

dbms_output.put_line('User count: ' u_cnt);

dbms_output.put_line('All count: ' a_cnt);

dbms_profiler.flush_data;

dbms_profiler.stop_profiler;

end;

/

SQL> Exec Test_Proc;

SQL> c:\profiler\profiler.sql

Below is an excerpt from the report for the above procedure: